SAFEXPLAIN: From Vision to Reality

AI Robustness & Safety

Explainable AI

Compliance & Standards

Safety Critical Applications

THE CHALLENGE: SAFE AI-BASED CRITICAL SYSTEMS

- Today’s AI allows advanced functions to run on high-performance machines, but its “black-box” decision-making remains a challenge for automotive, rail, space and other safety-critical applications, where a failure or malfunction may result in severe harm.

- Machine- and deep‑learning solutions running on high‑performance hardware enable true autonomy, but until they become explainable, traceable and verifiable, they can’t be trusted in safety-critical systems.

- Each sector enforces its own rigorous safety standards to ensure that the technology used is safe (space: ECSS; automotive: ISO 26262, ISO 21448 and ISO 8800; rail: EN 50126/8), and AI must also meet these functional safety requirements.

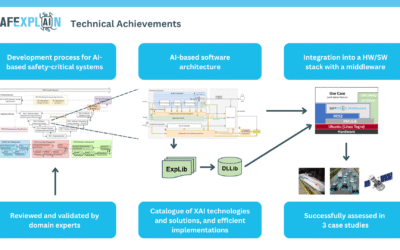

MAKING CERTIFIABLE AI A REALITY

Our next-generation open software platform is designed to make AI explainable and to make systems that integrate AI compliant with safety standards. This technology bridges the gap between cutting-edge AI capabilities and the rigorous demands of safety-critical environments. By bringing together experts in AI robustness, explainable AI, functional safety and system design, and by testing their solutions in safety-critical applications in the space, automotive and rail domains, we are contributing to trustworthy and reliable AI.

Key activities:

SAFEXPLAIN is enabling the use of AI in safety-critical systems by closing the gap between AI capabilities and functional safety requirements.

See SAFEXPLAIN technology in action

CORE DEMO

The Core Demo is built on a flexible skeleton of replaceable building blocks for Inference, Supervision or Diagnostic components, which allows it to be adapted to different scenarios. Full domain-specific demos are available on the technologies page.
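To illustrate what a "skeleton of replaceable building blocks" can mean in practice, here is a minimal Python sketch. It assumes that each block is simply a function with a fixed signature, so that any Inference, Supervision or Diagnostic implementation with the same signature can be swapped in without touching the skeleton. All names (`CoreDemoPipeline`, `mean_threshold_model`, `confidence_supervisor`, `simple_diagnostic`) and the toy thresholds are hypothetical and are not the SAFEXPLAIN API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical block signatures: any callable matching them can be plugged in.
Inference = Callable[[List[float]], Dict[str, float]]                # input -> prediction
Supervisor = Callable[[List[float], Dict[str, float]], bool]         # accept the prediction?
Diagnostic = Callable[[List[float], Dict[str, float], bool], str]    # status report


@dataclass
class CoreDemoPipeline:
    """Hypothetical skeleton wiring replaceable blocks together
    (an illustration, not the SAFEXPLAIN implementation)."""
    inference: Inference
    supervisor: Supervisor
    diagnostic: Diagnostic

    def step(self, frame: List[float]) -> Dict[str, object]:
        prediction = self.inference(frame)                        # run the AI model
        accepted = self.supervisor(frame, prediction)             # check its output
        status = self.diagnostic(frame, prediction, accepted)     # report system state
        return {"prediction": prediction, "accepted": accepted, "status": status}


# Toy blocks for an illustrative scenario (all hypothetical).
def mean_threshold_model(frame: List[float]) -> Dict[str, float]:
    # Stand-in "model": the mean of the input values is the obstacle score.
    return {"obstacle": sum(frame) / len(frame)}


def confidence_supervisor(frame: List[float], prediction: Dict[str, float],
                          low: float = 0.2, high: float = 0.8) -> bool:
    # Reject ambiguous scores that fall strictly between the two thresholds.
    score = prediction["obstacle"]
    return score <= low or score >= high


def simple_diagnostic(frame: List[float], prediction: Dict[str, float],
                      accepted: bool) -> str:
    return "OK" if accepted else "FALLBACK: prediction rejected by supervisor"


pipeline = CoreDemoPipeline(mean_threshold_model, confidence_supervisor, simple_diagnostic)
clear_result = pipeline.step([0.9, 0.95, 1.0])      # mean 0.95: confident, accepted
ambiguous_result = pipeline.step([0.4, 0.5, 0.6])   # mean 0.5: ambiguous, rejected
print(clear_result["status"])
print(ambiguous_result["status"])
```

Adapting this sketch to another scenario would only mean passing different functions to `CoreDemoPipeline`. The skeleton's `step` logic stays the same, which is the property the replaceable-block design aims for.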

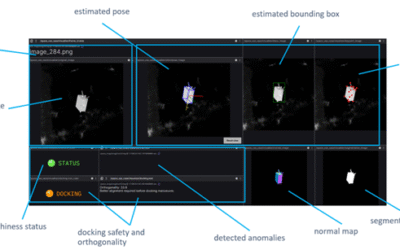

SPACE

Mission autonomy and AI to enable fully autonomous operations during space missions

Specific activities: Identify the target, estimate its pose, and monitor the agent's position to signal potential drifts, sensor faults, etc.

Use of AI: Decision ensemble

AUTOMOTIVE

Advanced methods and procedures to enable self-driving cars to accurately detect road users and predict their trajectories

Specific activities: Validate the system’s capacity to detect pedestrians, issue warnings, and perform emergency braking

Use of AI: Decision Function (mainly visualization-oriented)

SAFEXPLAIN final deliverables now available!

The project's 11 final deliverables are now available. Access the project's final results: the SAFEXPLAIN deliverables provide key details about the project and its final outcomes. The following deliverables have been completed for public dissemination and approved by...

PRESS RELEASE: SAFEXPLAIN delivers final results: Trustworthy AI Framework, tools and validated case studies

After three years of research and collaboration, the EU-funded SAFEXPLAIN project, coordinated by the Barcelona Supercomputing Center-Centro Nacional de Supercomputación (BSC-CNS), has released its final results: a complete, validated and independently assessed...

Final Space Demonstrator Results

The space case study takes place in a scenario highly relevant to the space industry: a spacecraft agent navigating towards a target satellite and attempting a docking manoeuvre. This is a typical situation in an In-Orbit Servicing mission. The case study...

SAFEXPLAIN Railway Demo at Quality Evaluation of ML-based Software Systems 2025

IKERLAN and exida development representatives will present "Alignment and complementarity between AI-FSM and ASPICE MLE: findings from the assessment of the SAFEXPLAIN Railway Demo" at the Quality Evaluation of ML-based Software Systems 2025 workshop within the 26th...

Trustworthy AI in Safety-Critical Systems: Overcoming adoption barriers

Learn how AI can be safely and reliably deployed in high-stakes domains like automotive, rail, aerospace and robotics. Through real-world demonstrations, technical sessions, and open discussions, participants will dive into the latest approaches for making AI systems robust, explainable, and compliant with safety standards.

Register your interest by 8 September to join this event in Barcelona.

Poster presentation at IEEE IOLTS 2025

The 2025 International Symposium on On-Line Testing and Robust System Design (IOLTS 2025), held on 7-9 July 2025, served as a premier symposium dedicated to advancing reliability, testing and secure design in electronics and systems. It is a well-established IEEE conference...